Ethics 2.0: Why Machines Should Learn to Make Difficult Decisions

Machines are now able to teach themselves, and they can handle large volumes of data more confidently than a human can. So why not leave the most difficult decisions up to them? At the most recent Future Foundation Technology Ethics Conference, HSE Associate Professor Kirill Martynov talked about how humans are helping robots understand what is good and what is not, and he also discussed the dangers associated with the development of artificial intelligence.

‘How does, or how can, modern technology change society? We are already seeing how communication has been influenced by the development of the internet, specifically the mobile internet. These changes have not yet been reflected upon in any way. In other words, the sum of all human knowledge is now accessible from anyone’s pocket and from any gadget that the average teenager can get his or her hands on. Yet people are not touched or affected by this in any way; they all believe that this is how it has always been and that no serious cultural changes have taken place.

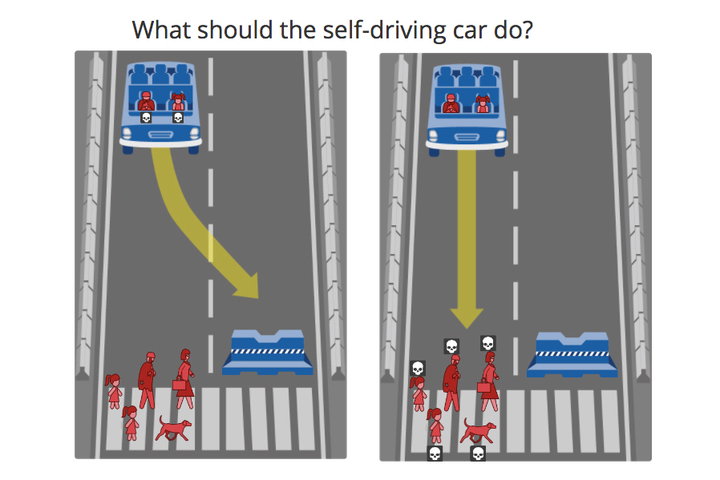

‘When we talk about the technological future – more specifically the future where logical machines, or at least autonomous machines, are capable of acting in a complex environment – we constantly fall into the trap set for us by mass culture. I googled the term “war machines,” and Google gave me more than 60 million results. In talking about what the future will look like, people always return to the ideas presented in the movie The Terminator. A second scenario has now been added to the mix, though: even if we aren’t going to battle machines, we are told that machines will take all of our jobs. This pulls us away from the real problems.

‘A good example of a real problem is the idea described in Yuval Noah Harari’s book Homo Deus: A Brief History of Tomorrow. Harari’s thesis is quite radical – the future we have to prepare for involves both the end of a person’s real uniqueness as a subject and the end of our belief that only people can have this unique subjectivity. In other words, though human history and human culture have thus far featured only people as the actors (humans as soldiers, as voters, as economic agents), the future will include humans as well as other systems that will be able to make decisions and act within the framework of the real world, thereby changing social reality. And this is a real risk. This is something much more serious than primitive fantasies about war between humans and machines.’

The full text of the lecture can be found on T&P’s website.

Kirill K. Martynov