Attention Priority Map Explains Unusual Visual Search Phenomena

Researchers from HSE University and Harvard have found that the grouping of multiple elements in a visual display does not affect the search speed for an element with a unique combination of features. The Guided Search theory predicted such results. The study is published in the Journal of Vision.

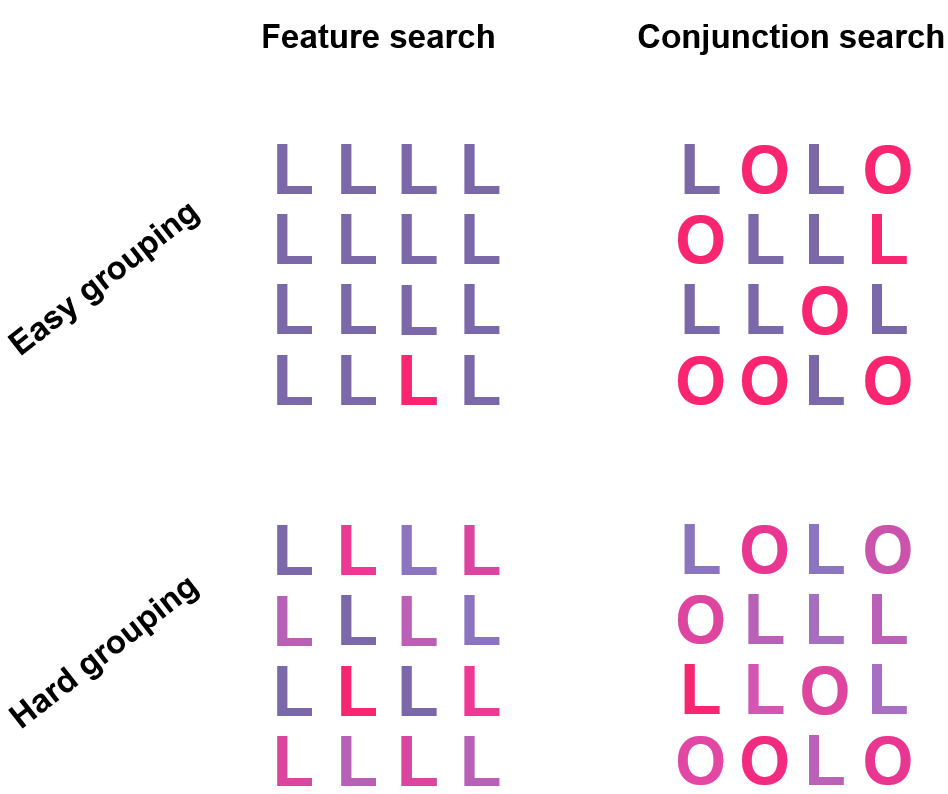

Visual search is a common task in everyday life. It isn’t difficult, for example, to find an object with a unique feature, such as a red letter (the target) among a number of blue letters (the distractors). This is called a feature search. It is more difficult to find an object that differs from others by a combination of features, for example, a red letter ‘L’ among red letters ‘O’ and blue letters ‘T’. In such situations, you perform a conjunction search.

Conjunction Search Theories

Scientists have long known that the speed of searching for a target with a single feature depends on how easy it is to group the objects in a display. When the letters are clearly divided into two color categories (upper left image), the red element immediately grabs attention (“pops out”) among the blue elements, because all the blue elements are clearly grouped together and the red one stands apart. However, if the letters come in various shades between red and blue (lower left image), it is more difficult to find the red letter, because some distractors can now be grouped with the target and others are intermediate between red and blue.

Intuitively, it would seem that in a search for a combination of features, a particular element would be found faster when the grouping is clear (upper right image). This is the result predicted by search theories with a sequential mechanism of selection, which posit that the search for a combination of features is a two-step process. When letters are grouped easily by color, you first select all the red letters and then search only for differences in shape, making the task twice as easy. However, if the colors are not easily grouped into two categories, it would be more difficult to distinguish between groups and the search would take longer.

In contrast, a search theory with a simultaneous mechanism of selection (namely, the Guided Search theory, developed in the late 1980s by one of the paper’s authors, Jeremy Wolfe) proposes just the opposite. It argues that information about all relevant features is used simultaneously when directing one’s attention towards a potential target. That is, if you are looking for a red letter of a certain shape, your visual system will simultaneously activate locations with red letters and locations with letters of that shape. Thus, your attention will focus first on those places where both the color and the shape are as similar to the target as possible.

Verifying Theories by Experiment

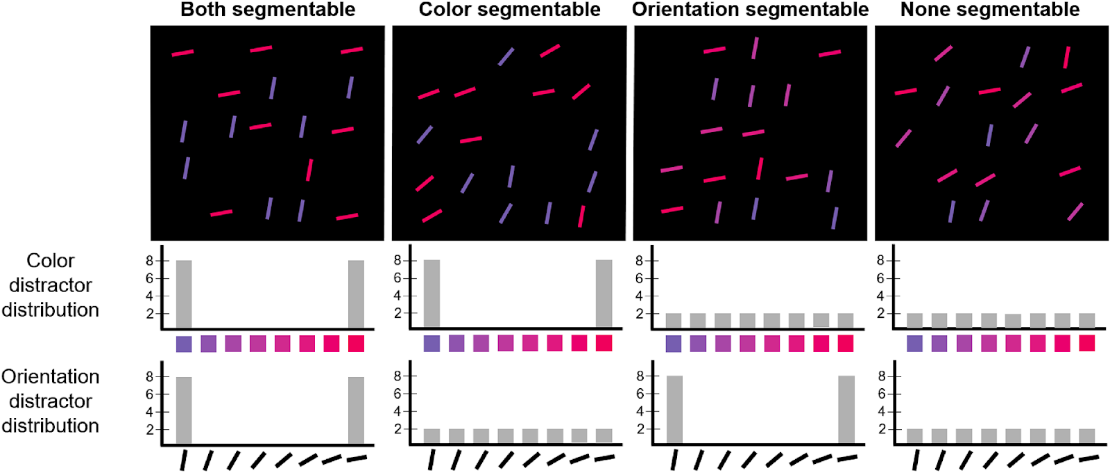

The scientists conducted a series of experiments to test the predictions of these two theories. In the first, subjects were asked to search for a line of a particular color and orientation among other lines — for example, the steep red line in the pictures below. The difficulty of categorizing lines by their features varied among the trials: in some trials, the lines were clearly grouped by color and orientation, while in others, the differences in color and orientation were less distinct, making it more difficult to divide them into clear groups.

The sequential mechanism predicts that subjects will find the target faster when the lines are easy to group (the leftmost picture) and that they will have increasing difficulty as the stimuli become more variable and thus harder to group (the rightmost picture). However, the subjects completed the task at the same speed for all four types of images. This result is surprising, not only because it is counterintuitive, but also because earlier research demonstrated that grouping influences the speed of searches for a single feature.

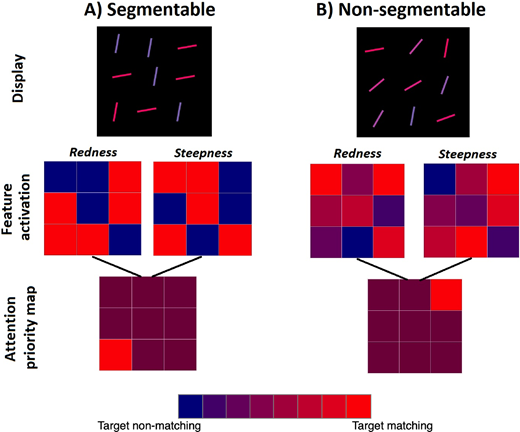

The Attention Priority Map as a Main Search Controller

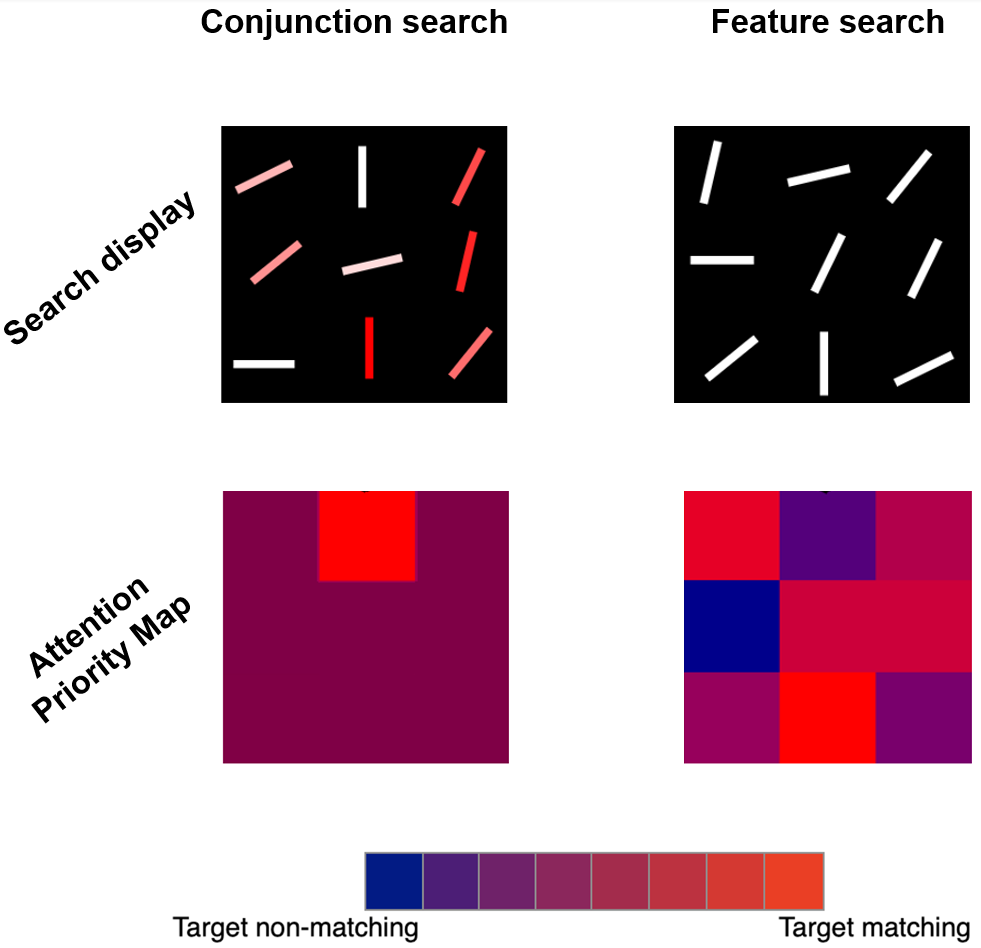

This result is explained by search theories with a simultaneous selection mechanism, and particularly by the Guided Search theory. The distribution of features over the display automatically produces a set of feature activation maps (e.g. color maps or orientation maps), in which the activation level at each location indicates the similarity between the feature presented at that location and that of the target. The outputs of these feature maps are then summed on the Attention Priority Map, whose activation indicates how likely each area is to contain all the target features. In other words, attention is likely to go first to wherever activation is highest. This mechanism makes it equally easy to attend to a target regardless of how hard the display is to group, because there is only one place that has both target features at once.
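The summation described above can be illustrated with a minimal sketch. It is not the authors' model: the linear similarity function, the 0-to-1 feature coding, and the two example displays are all assumptions made up for illustration.

```python
# Illustrative sketch only. Each item is (color, orientation), both coded
# on an assumed 0..1 scale: color 0 = blue, 1 = red; orientation 0 = flat, 1 = steep.

def similarity(value, target_value):
    """Assumed activation rule: 1.0 for a perfect match, falling linearly to 0.0."""
    return 1.0 - abs(value - target_value)

def priority_map(items, target):
    """Sum the color-map and orientation-map activations at each location."""
    return [
        similarity(color, target[0]) + similarity(orientation, target[1])
        for color, orientation in items
    ]

target = (1.0, 1.0)  # a steep red line

# Hypothetical easy-to-group display: two colors and two orientations.
easy = [(1.0, 1.0), (1.0, 0.0), (0.0, 1.0), (0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
# Hypothetical hard-to-group display: intermediate colors and orientations.
hard = [(1.0, 1.0), (0.8, 0.3), (0.3, 0.8), (0.5, 0.5), (0.6, 0.1), (0.1, 0.6)]

easy_map = priority_map(easy, target)
hard_map = priority_map(hard, target)

# Location of the highest activation in each display (index 0 is the target).
easy_peak = easy_map.index(max(easy_map))
hard_peak = hard_map.index(max(hard_map))
print(easy_peak, hard_peak)
```

In both made-up displays the peak of the summed map falls on the target's location, because only the target matches on color and orientation at once.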

To further test the proposed explanations, the researchers conducted another experiment in which, in addition to searching for a feature combination, subjects were asked to search for a line based on only one feature: its unique orientation or its unique color. It turned out that in the poor grouping conditions, searching for a combination of features (for example, a white vertical line among lines of different colors and orientations) can be faster than searching for a single feature (for example, a white line among lines of different colors or a vertical line among lines of different orientations).

Seemingly, this does not fit into the visual search theory at all, and even violates one of its ‘laws’ — namely, that the search for a single feature should be easier than a search for a combination of features. However, the attention priority map hypothesis of Guided Search provides an explanation for this unusual finding: when searching for a single feature, many parts of the map show similar levels of activation, whereas when searching for a combination of features, the location containing the target object has the highest level of activation.
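A rough numerical illustration of this contrast follows. It assumes a linear similarity rule and a made-up, poorly grouped display, and it uses the gap between the target and the strongest distractor as a hypothetical proxy for how clearly the target stands out; none of these choices come from the study itself.

```python
# Illustrative sketch only. Items are (color, orientation) on an assumed 0..1 scale.

def similarity(value, target_value):
    """Assumed activation rule: 1.0 for a perfect match, falling linearly to 0.0."""
    return 1.0 - abs(value - target_value)

def margin(activations, target_index=0):
    """Gap between the target's activation and the strongest distractor's."""
    best_distractor = max(
        a for i, a in enumerate(activations) if i != target_index
    )
    return activations[target_index] - best_distractor

target = (1.0, 1.0)

# Hypothetical poorly grouped display: several distractors are close to the
# target in color OR in orientation, but none is close in both.
display = [(1.0, 1.0), (0.9, 0.1), (0.8, 0.3), (0.1, 0.9), (0.3, 0.8), (0.85, 0.2)]

# Single-feature search: only the color map guides attention.
color_only = [similarity(c, target[0]) for c, _ in display]

# Conjunction search: color and orientation maps are summed.
conjunction = [
    similarity(c, target[0]) + similarity(o, target[1]) for c, o in display
]

color_margin = margin(color_only)
conjunction_margin = margin(conjunction)
print(color_margin < conjunction_margin)
```

With these invented numbers, the color map alone leaves the target only slightly ahead of its nearest competitor, while the summed map separates it by a much wider gap.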

Vladislav Khvostov, Junior Research Fellow at the Laboratory for Cognitive Research and one of the study’s authors:

‘Everyone does a lot of visual searches every day. It is very rare that the object we are looking for has one unique feature: more often, it has a certain combination of several features. In this study, we have demonstrated that the search for a combination of features works just as efficiently, regardless of how good the grouping of the elements is. This is made possible by building an attention priority map that collects the information about several features simultaneously.’
